Creating a Conversational Data Analyst Chatbot using LangChain, OpenAI, Google BigQuery and Google Cloud Functions

In our previous post on Automating Insights and Dashboard Report-Level Narratives using Vertex AI Gemini 1.5-Flash, Looker Studio and Google BigQuery BQML I showed how generative AI could be used to add commentary and action points to individual charts and tables in a Looker Studio report.

In this post I’ll show how we’ve combined the thinking and reasoning part of generative AI with conversational, chatbot-style interface to create a “proof of concept” AI-powered data analyst website plugin, able to answer questions about KPIs and trends within your data warehouse and with the ability to understand the context and meaning in the questions your users ask of it.

To create this conversational data analyst chatbot we created a simple Javascript client front-end that collects users’ questions and sends them, via a REST API interface to a Google Cloud Function that, under-the-covers, uses LangChain and OpenAI’s GPT-4-Turbo LLM to query a Google BigQuery cloud data warehouse.

When clicked, the chatbot starts by asking if you’d like to ask a question about your data, for example by asking what was our total revenue in May 2024 and pressing the “go” button. After first responding with “thinking…”, around twenty seconds later the chatbot comes back with an answer, to which you can either respond with further questions or press the close (“x”) button to end the chatbot session.

To make those questions and answers conversational, the Javascript front-end remembers the answers given the user within each chat session and then adds them to the user’s question when sending them to the LLM for processing, so that if you follow-up question asks “how does this compare to same time last year” it understands that “this” is a reference to revenue and “same time last year” is a reference to May 2023.

To answer these questions the chatbot sends SQL queries to our Google BigQuery data warehouse in which we’ve created a set of summary tables specifically designed to answer questions around monthly performance KPIs, sales and lead generation activity and data on sessions and page views for our company website.

So how did we put this together? If you’re interested in seeing the code and maybe running it yourself there’s a Github repo with instructions on how to deploy to Google Cloud, and of course if you’d like us to deploy or build something similar for your organization then check-out the section at the end of this post on how to contact us.

At a high-level though there’s two main parts to this conversational data analyst chatbot:

The back-end, written in Python and running as a serverless Google Cloud Function in Google Cloud Platform, that receives questions from a front-end service and sends-back answers for that front-end to process and display

The front-end, which can be as simple as a cURL command such as the one below:

curl -X POST -H "Content-Type: application/json" \

--data '{"question":"and what was it in April 2024?"}' \

https://YOUR_REGION-YOUR_PROJECT_ID.cloudfunctions.net/ra-databot

or as shown in the diagram below, could be a Javascript + CSS web page chatbot dialog that shows those questions and answers in a conversational, messaging app format.

So how does the front-end client and back-end service work?

1. The chatbot dialog is displayed when the user presses a chatbot button that triggers the display of the chatbot dialog.

2. The user types in a question into the chatbot’s “type question here” text box, for example “How much were our sales in May 2024?”

3. Javascript within that chatbot plugin then sends the question, together with any previous answers to that user’s question in this particular chat session, to the back-end service’s REST API like this:

fetch('https://YOUR_REGION-YOUR_PROJECT_ID.cloudfunctions.net/ra-databot', requestOptions)

.then((response) => response.text())

.then((data) => {

console.log('Received data:', data);

tempBubble.innerHTML = data; // Replace the temporary bubble content with the actual response

chatHistory += `Bot: ${data}\n`; // Append bot response to chat history

})

.catch((error) => {

console.error('Error:', error);

tempBubble.innerHTML = 'Sorry, I could not get the answer. Please try again later.';

});

}

});

4. The back-end service takes this incoming question and prepends the following prompt to the user’s question:

“You are a knowledgeable data analyst working for Rittman Analytics. Answer questions correctly, do not delete or alter any data and provide concise (no more than 10 words) commentary and analysis where appropriate. Use the

ra-development.analytics_wide.monthly_company_metrics for monthly summary KPI questions,

ra-development.analytics_wide.sales_leads for questions about sales leads,

ra-development.analytics_wide.website_traffic for questions about website performance,

ra-development.analytics_wide.sales_deals for sales pipeline and sales activity questions

to answer this question, and no other tables. Do not include markdown-style triple backticks in the SQL you generate and try to use or validate. Question is:”

5. This prompt prepended to the user’s question is then passed to LangChain, a framework designed to simplify the integration of large language models (LLMs) like OpenAI's GPT-4-Turbo with various data sources and tools, enabling the creation of sophisticated applications that leverage natural language understanding and generation capabilities. LangChain acts as a bridge between the language model and the data sources/tools and provides abstractions and utilities to connect the LLM with various tools, including databases, APIs, and other services, as we do in our back-end code:

from google.cloud import bigquery

from sqlalchemy import *

from sqlalchemy.engine import create_engine

from sqlalchemy.schema import *

import os

from langchain.agents import create_sql_agent

from langchain.agents.agent_toolkits import SQLDatabaseToolkit

from langchain.sql_database import SQLDatabase

from langchain_openai import ChatOpenAI

from langchain.agents import AgentExecutor

from config.config import *

project = os.environ["GCP_PROJECT"]

dataset = os.environ["BQ_DATASET"]

gcp_credentials = os.environ["GCP_CREDENTIALS"]

open_ai_model = os.environ["OPEN_AI_MODEL"]

sqlalchemy_url = f'bigquery://{project}/{dataset}?credentials_base64={gcp_credentials}'

db = SQLDatabase.from_uri(sqlalchemy_url)

llm = ChatOpenAI(

model=open_ai_model,

temperature=0,

max_tokens=None,

timeout=None,

max_retries=2

)

toolkit = SQLDatabaseToolkit(db=db, llm=llm)

agent_executor = create_sql_agent(

llm=llm,

toolkit=toolkit,

verbose=True,

max_iterations=20,

top_k=1000,

agent_type="tool-calling"

)

Instruction = "..."

6. LangChain's SQL Agent is designed to translate natural language queries into SQL queries by leveraging the capabilities of, for example, OpenAI’s GPT-4-Turbo LLM in order to understand the user's question and generate the appropriate SQL query.

7. The Tools within LangChain include connectors to cloud data warehouse such as Google BigQuery, enabling the execution of SQL queries and retrieval of data. Those tool invocations first read the database data dictionary, then choose the most suitable table or tables to query, then sample those tables’ contents and then write, test and then execute the correct SQL query to return the answer to the user’s query

8. The results from the cloud data warehouse are processed and formatted and the GPT-4-Turbo LLM can further refine the results, providing a natural-language explanation or summary if needed.

9. Those results are then sent-back to the Javascript app plugin as the REST API response and then displayed in the chatbot UI as the answer to the users’ question.

10. If the user asks further questions as follow-ups to their first question, the Chatbot javascript app appends the results of previous questions in this chat to those follow-up questions so that the LLM has the context of the conversation available to it when formulating its answer.

In-practice developing and deploying a Cloud Function needs a means to store and provide as required the API keys and credentials needed to access the Open AI API and the BigQuery data warehouse and the readme file within our Github repo explains how we use Google Cloud’s Secrets Manager to do this.

TODO on the development list includes securing the back-end’s REST API interface so that it can only be accessed by a service account with the right IAM permissions granted, and to enable the front-end client to pass the user’s ID along with their question so that answers are limited to just the data they’re allowed to see.

We also configure the Cloud Function to have at least one instance running at any one time so that users don’t have to wait around for python libraries to load-up if the function has to do a cold start; longer-term we’d look into running the back-end code as a service deployed via Cloud Run if that works-out faster and cheaper to run than as a Cloud Function.

INTERESTED? FIND OUT MORE!

Rittman Analytics is a boutique data analytics consultancy that helps ambitious, digital-native businesses scale-up their approach to data, analytics and generative AI.

We’re authorised delivery partners for Google Cloud along with Oracle, Segment, Cube, Dagster, Preset, dbt Labs and Fivetran and are experts at helping you design an analytics solution that’s right for your organisation’s needs, use-cases and budget and working with you and your data team to successfully implement it.

If you’re looking for some help and assistance with your AI initiative or would just like to talk shop and share ideas and thoughts on what’s going on in your organisation and the wider data analytics world, contact us now to organise a 100%-free, no-obligation call — we’d love to hear from you!

Recommended Posts

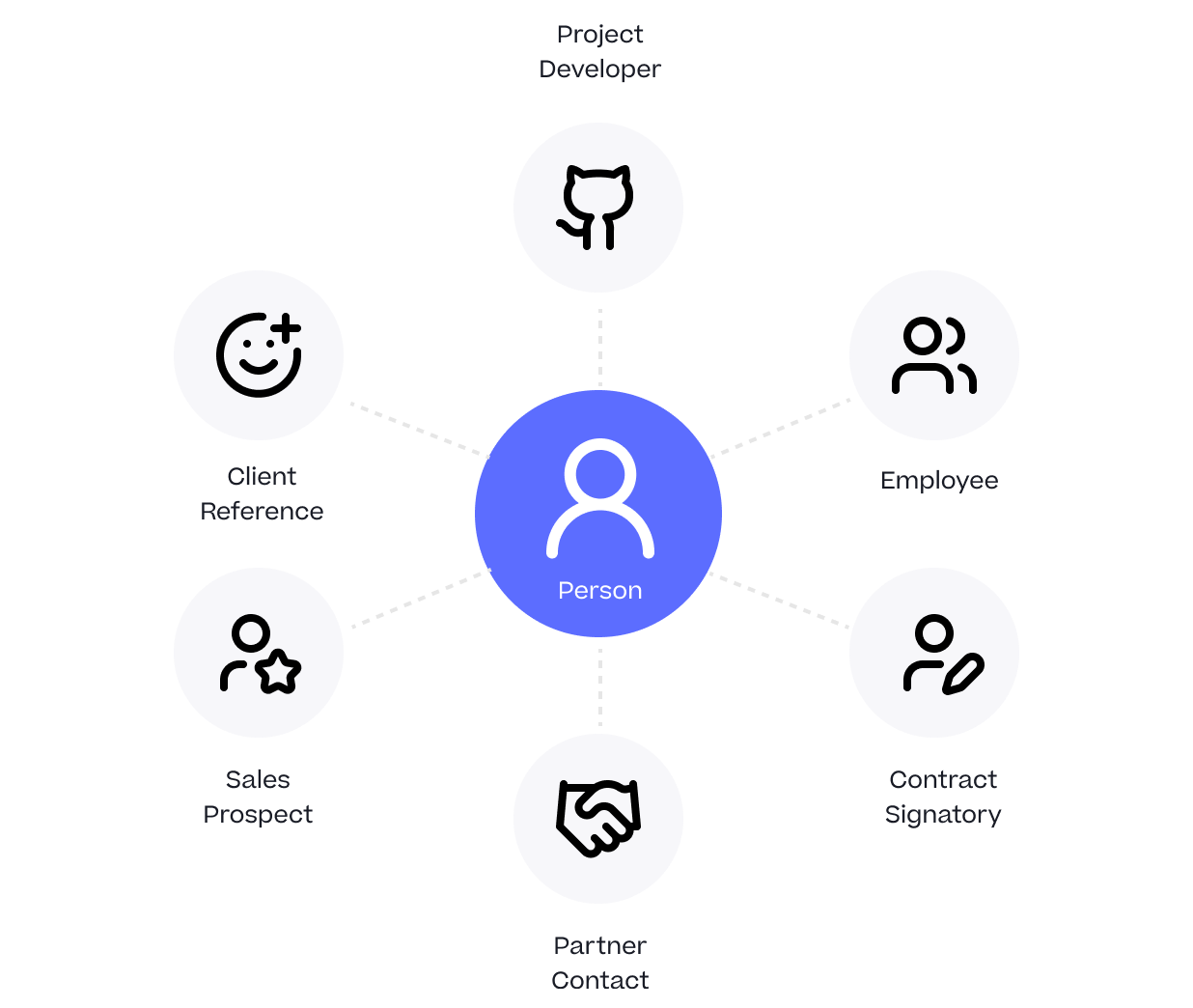

One Person Many Roles: Designing a Unified Person Dimension in Google BigQuery

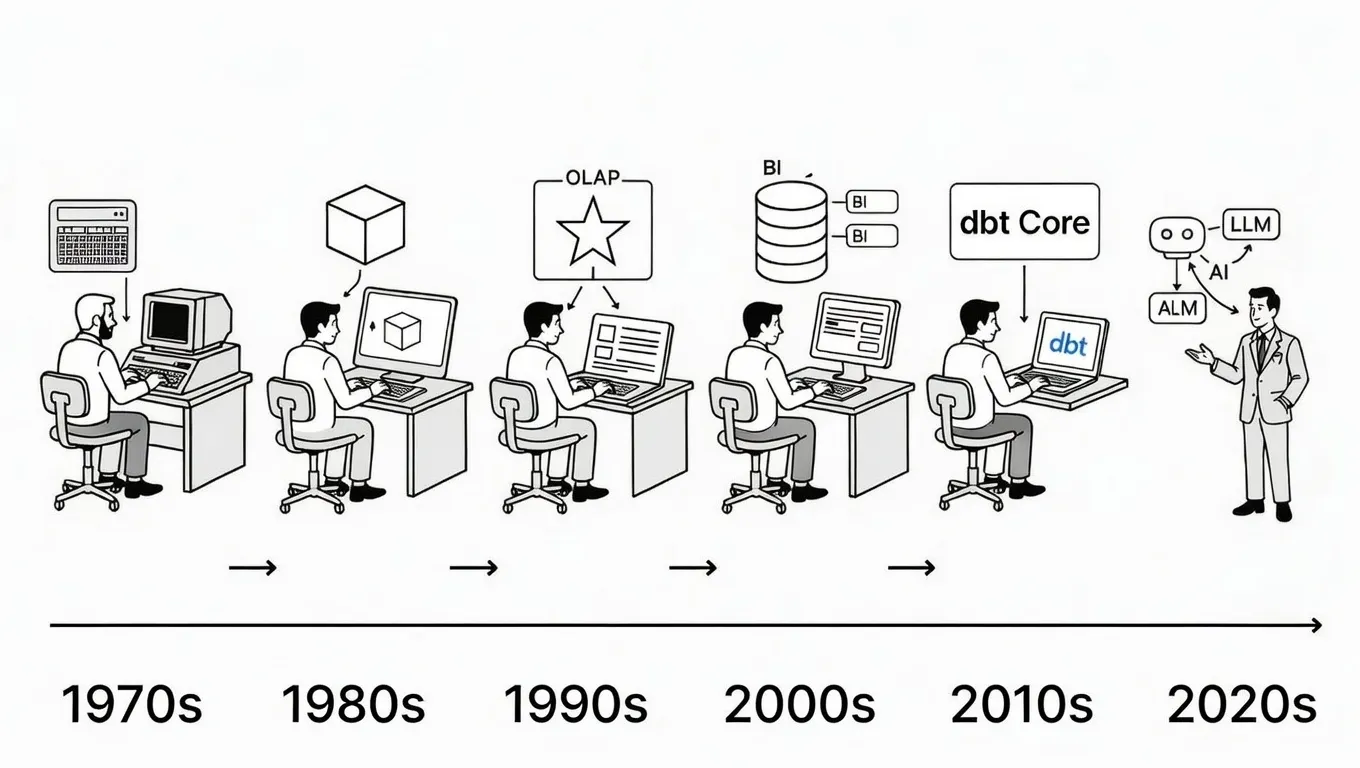

Why We’ve Tried to Replace Data Analytics Developers Every Decade Since 1974

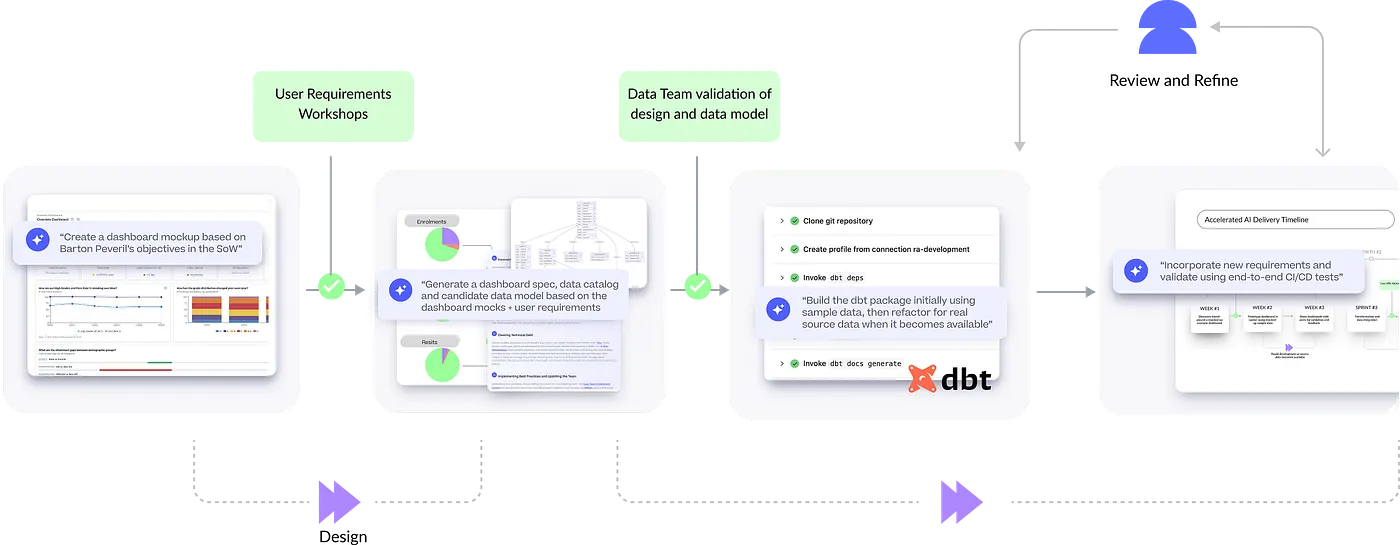

How Rittman Analytics uses AI-Augmented Project Delivery to Provide Value to Users, Faster